Cover Material, Copyright, and License

Copyright 2016 Mark Watson. All rights reserved. This book may be shared using the Creative Commons “share and share alike, no modifications, no commercial reuse” license.

This eBook will be updated occasionally so please periodically check the leanpub.com web page for this book for updates.

Please visit the author’s website.

If you found a copy of this book on the web and find it of value then please consider buying a copy at leanpub.com/haskell-cookbook to support the author and fund work for future updates.

Preface

This is the preface to the new second edition, released in the summer of 2019.

It took me over a year of learning Haskell before I became comfortable with the language because I tried to learn too much at once. There are two aspects to Haskell development: writing pure functional code and writing impure code that needs to maintain state and generally deal with the world non-deterministically. I usually find writing pure functional Haskell code to be easy and a lot of fun. Writing impure code is sometimes a different story. This is why I am taking a different approach to teaching you to program in Haskell: we begin with techniques for writing concise, efficient pure Haskell code that is easy to read and understand. I will then show you patterns for writing impure code to deal with file IO, network IO, database access, and web access. You will see that the impure code tends to be (hopefully!) a small part of your application and is isolated in the impure main program and in a few impure helper functions used by the main program. Finally, we will look at a few larger Haskell programs.

Additional Material in the Second Edition

In addition to updating the introduction to Haskell and tutorial material, I have added a few larger projects to the second edition.

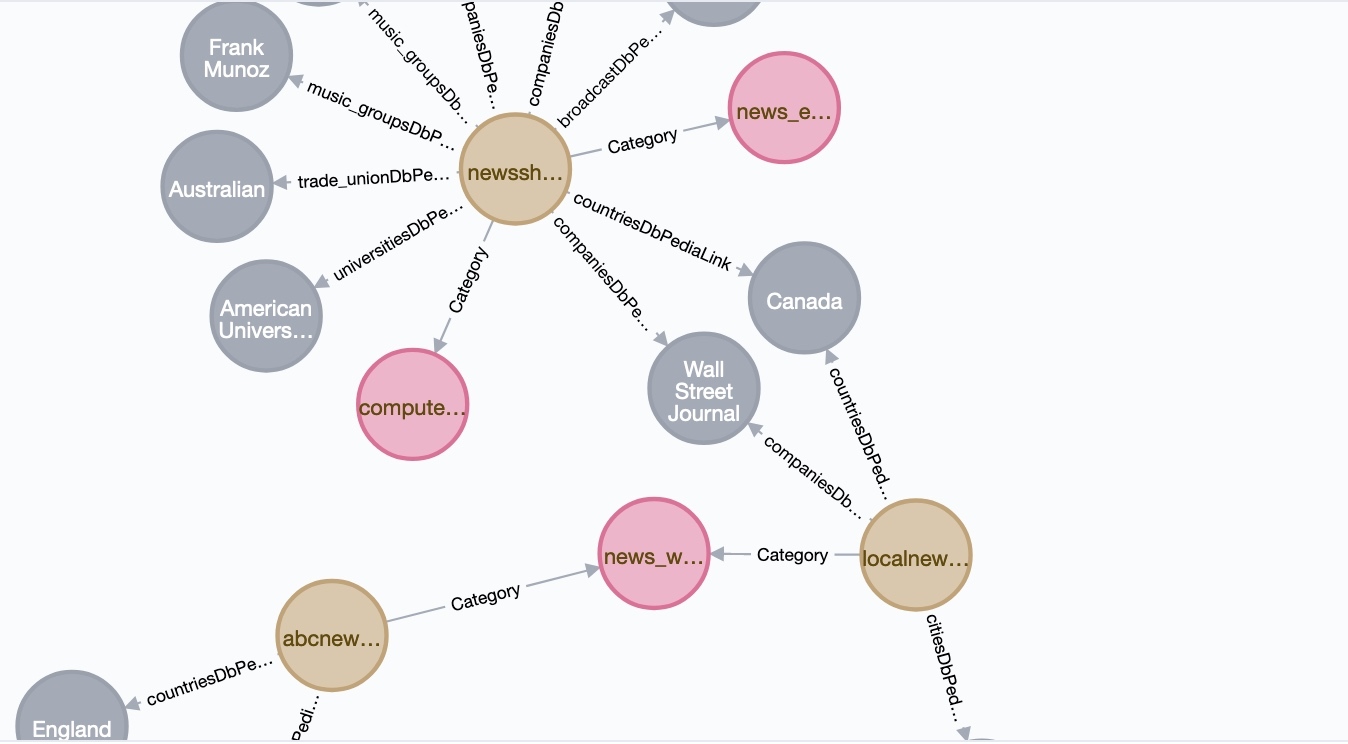

The project knowledge_graph_creator helps to automate the process of creating Knowledge Graphs from raw text input. It generates data both for the Neo4j open source graph database and as RDF data for use in semantic web and linked data applications.

The project HybridHaskellPythonNlp is a hybrid project: a Python web service that provides access to the SpaCy natural language processing (NLP) library and select NLP deep learning models and a Haskell client for accessing this service. It sometimes makes sense to develop polyglot applications (i.e., applications written in multiple programming languages) to take advantage of language specific libraries and frameworks. We will also use a similar hybrid example HybridHaskellPythonCorefAnaphoraResolution that uses another deep learning model to replace pronouns in text with the original nouns that the pronouns refer to. This is a common processing step for systems that extract information from text.

A Request from the Author

I spent time writing this book to help you, dear reader. I release this book under the Creative Commons “share and share alike, no modifications, no commercial reuse” license and set the minimum purchase price to $5.00 in order to reach the most readers. You can also download a free copy from my website. Under this license you can share a PDF version of this book with your friends and coworkers. If you found this book on the web (or it was given to you) and if it provides value to you then please consider doing one of the following to support my future writing efforts and also to support future updates to this book:

- Purchase a copy of this book at leanpub.com/haskell-cookbook

- Hire me as a consultant

I enjoy writing and your support helps me write new editions and updates for my books and to develop new book projects. Thank you!

Structure of the Book

The first section of this book contains two chapters:

- A tutorial on pure Haskell development: no side effects.

- A tutorial on impure Haskell development: dealing with the world (I/O, network access, database access, etc.). This includes examples of file IO and network programming as well as writing short applications: a mixture of pure and impure Haskell code.

After working through these tutorial chapters you will know enough Haskell to understand the cookbook examples in the second section and to modify them for your own use. Some of the general topics will be covered again in the second section, which contains longer sample applications. For example, you will learn the basics of interacting with Sqlite and Postgres databases in the tutorial on impure Haskell code, but you will see a much longer example later in the book when I provide code that implements a natural language processing (NLP) interface to relational databases.

The second section contains the following recipes implemented as complete programs:

- Text processing CSV Files

- Text processing JSON Files

- Natural Language Processing (NLP) interface to relational databases, including annotating English text with Wikipedia/DBPedia URIs for entities in the original text. Entities can be people, places, organizations, etc.

- Accessing and Using Linked Data

- Querying Semantic Web RDF Data Sources

- Web scraping data on web sites

- Using Sqlite and Postgres relational databases

- Playing a simple version of the Blackjack card game

A new third section (added in 2019 for the second edition) has three examples that were derived from my own work.

Code Examples

The code examples in this book are licensed under two software licenses and you can choose the license that works best for your needs: Apache 2 and GPL 3. To be clear, you can use the examples in commercial projects under the Apache 2 license and if you like to write Free (Libre) software then use the GPL 3 license.

We will use stack as a build system for all code examples. The code examples are provided as 22 separate stack based projects. These examples are found on github.

Functional Programming Requires a Different Mind Set

You will learn to look at problems differently when you write functional programs. We will use a bottom up approach in most of the examples in this book. I like to start by thinking of the problem domain and deciding how I can represent the data required for the problem at hand. I prefer to use native data structures. This is the opposite approach to object oriented development, where considerable analysis and coding effort is required to define class hierarchies to represent data. In most of the code we use simple native data types like lists and maps.

Once we decide how to represent data for a program we then start designing and implementing simple functions to operate on and transform data. If we find ourselves writing functions that are too long or too complex, we can break up code into simpler functions. Haskell has good language support for composing simple functions into more complex operations.

I have spent many years engaged in object oriented programming starting with CLOS for Common Lisp, C++, Java, and Ruby. I now believe that in general, and I know it is sometimes a bad idea to generalize too much, functional programming is a superior paradigm to object oriented programming. Convincing you of this belief is one of my goals in writing this book!

eBooks Are Living Documents

I wrote printed books for publishers like Springer-Verlag, McGraw-Hill, and Morgan Kaufmann before I started self-publishing my own books. I prefer eBooks because I can update already published books and their code examples.

I encourage you to periodically check for free updates to both this book and the code examples on the leanpub.com web page for this book.

Setting Up Your Development Environment

I strongly recommend that you use the stack tool from the stack website. This web site has instructions for installing stack on OS X, Windows, and Linux. If you don’t have stack installed yet please do so now and follow the “getting started” instructions for creating a small project. Appendix A contains material to help get you set up.

It is important for you to learn the basics of using stack before jumping into this book because I have set up all of the example programs using stack.

The github repository for the examples in this book is located at github.com/mark-watson/haskell_tutorial_cookbook_examples.

Many of the example listings are partial or full listings of files in my github repository. I show the file name, the listing, and the output. To experiment with an example yourself you need to load it and execute the main function; for example, if the example file is TestSqLite1.hs in the sub-directory Database, then from the top level directory in the git repository for the book examples you would do the following:

$ haskell_tutorial_cookbook_examples git:(master) > cd Database

$ Database git:(master) > stack build --exec ghci

GHCi, version 7.10.3: http://www.haskell.org/ghc/ :? for help

Prelude> :l TestSqLite1

[1 of 1] Compiling Main ( TestSqLite1.hs, interpreted )

Ok, modules loaded: Main.

*Main> main

"Table names in database test.db:"

"test"

"SQL to create table 'test' in database test.db:"

"CREATE TABLE test (id integer primary key, str text)"

"number of rows in table 'test':"

1

"rows in table 'test':"

(1,"test string 2")

*Main>

If you don’t want to experiment with the example interactively in a REPL, you can just run it via stack:

(master) > stack build --exec TestSqlite1

"Table names in database test.db:"

"test"

"SQL to create table 'test' in database test.db:"

"CREATE TABLE test (id integer primary key, str text)"

"number of rows in table 'test':"

1

"rows in table 'test':"

(1,"test string 2")

I include README.md files in the project directories with specific instructions.

I now use VSCode for most of my Haskell development. With the Haskell plugins, VSCode offers auto-completion while typing and highlights syntax errors. Previously I used other editors for Haskell development. If you are an Emacs user I recommend that you follow the instructions in Appendix A, load the tutorial files into an Emacs buffer, build an example, and open a REPL frame: if one is not already open, type control-c control-l, switch to the REPL frame, and run the main function. When you make changes to the tutorial files, typing control-c control-l again will re-build the example in less than a second. In addition to using Emacs I occasionally use the IntelliJ Community Edition (free) IDE with the Haskell plugin, the TextMate editor (OS X only) with the Haskell plugin, or the GNOME gedit editor (Linux only).

Appendix A also shows you how to set up the stack Haskell build tool.

Whether you use Emacs/VSCode or run a REPL in a terminal window (command window if you are using Windows) the important thing is to get used to and enjoy the interactive style of development that Haskell provides.

Why Haskell?

I have been using Lisp programming languages professionally since 1982. Lisp languages are flexible and appropriate for many problems. Some might disagree with me but I find that Haskell has most of the advantages of Lisp with the added benefit of being strongly typed. Both Lisp and Haskell support a style of development using an interactive shell (or “repl”).

What does being a strongly typed language mean? In a practical sense it means that you will often encounter compile-time errors caused by type mismatches that you will need to fix before your code will compile (or run in the GHCi shell interpreter). Once your code compiles it will likely work, barring a logic error. The other benefit that you can get is having to write fewer unit tests - at least that is my experience. So, using a strongly typed language is a tradeoff. When I don’t use Haskell I tend to use dynamic languages like Common Lisp or Python.

Enjoy Yourself

I have worked hard to make learning Haskell as easy as possible for you. If you are new to the Haskell programming language then I have something to ask of you, dear reader: please don’t rush through this book, rather take it slow and take time to experiment with the programming examples that most interest you.

Acknowledgements

I would like to thank my wife Carol Watson for editing the manuscript for this book. I would like to thank Roy Marantz, Michel Benard, and Daniel Kroni for reporting errors.

Section 1 - Tutorial

The first section of this book contains two chapters:

- A tutorial on pure Haskell development: no side effects.

- A tutorial on impure Haskell development: dealing with the world (I/O, network access, database access, etc.)

After working through these two tutorial chapters you will have sufficient knowledge of Haskell development to understand the cookbook examples in the second section and be able to modify them for your own use. Some of the general topics will be covered again in the second book section that contains longer example programs.

Tutorial on Pure Haskell Programming

Pure Haskell code has no side effects and if written properly is easy to read and understand. I am assuming that you have installed stack using the directions in Appendix A. It is important to keep a Haskell interactive repl open as you read the material in this book and experiment with the code examples as you read. I don’t believe that you will be able to learn the material in this chapter unless you work along trying the examples and experimenting with them in an open Haskell repl!

The directory Pure in the git repository contains the examples for this chapter. Many of the examples contain a small bit of impure code in a main function. We will cover how this impure code works in the next chapter. Here is an example of impure code, contained inside a main function that you will see in this chapter:

main = do
  putStrLn ("1 + 2 = " ++ show (1 + 2))

I ask you to treat these small bits of impure code in this chapter as a “black box” and wait for the next chapter for a fuller explanation.

Pure Haskell code performs no I/O, network access, access to shared in-memory data structures, etc.

The first time you build an example program with stack it may take a while since library dependencies need to be downloaded from the web. In each example directory, after the initial stack build or stack ghci (to run the repl), you should not notice this delay.

Interactive GHCi Shell

The interactive shell (often called a “repl”) is very useful for learning Haskell: understanding types and the value of expressions. While simple expressions can be typed directly into the GHCi shell, it is usually better to use an external text editor and load Haskell source files into the shell (repl). Let’s get started. Assuming that you have installed stack as described in Appendix A, please try:

1 ~/$ cd haskell_tutorial_cookbook_examples/Pure

2 ~/haskell_tutorial_cookbook_examples/Pure$ stack ghci

3 Using main module: Package `Pure' component exe:Simple with main-is file: /home/mark\

4 w/BITBUCKET/haskell_tutorial_cookbook_examples/Pure/Simple.hs

5 Configuring GHCi with the following packages: Pure

6 GHCi, version 7.10.3: http://www.haskell.org/ghc/ :? for help

7 [1 of 1] Compiling Main ( /home/markw/BITBUCKET/haskell_tutorial_cookboo\

8 k_examples/Pure/Simple.hs, interpreted )

9 Ok, modules loaded: Main.

10 *Main> 1 + 2

11 3

12 *Main> (1 + 2)

13 3

14 *Main> :t (1 + 2)

15 (1 + 2) :: Num a => a

16 *Main> :l Simple.hs

17 [1 of 1] Compiling Main ( Simple.hs, interpreted )

18 Ok, modules loaded: Main.

19 *Main> main

20 1 + 2 = 3

21 *Main>

If you are working in a repl and edit a file you just loaded with :l, you can then reload the last file loaded using :r without specifying the file name. This makes it quick and easy to edit a Haskell file with an external editor like Emacs or Vi and reload it in the repl after saving changes to the current file.

Here we have evaluated a simple expression “1 + 2” in line 10. Notice in line 12 that we can always place parentheses around an expression without changing its value. We will use parentheses when we need to override the default precedence of functions and operators, or to make the code more readable.

In line 14 we are using the ghci :t command to show the type of the expression (1 + 2). Num is a type class (i.e., a named set of functions that a type supports by declaring an instance of the class) that covers several kinds of numbers, and Num a => a is read as “a value of any type a that is an instance of Num”. As examples, Fractional is a subclass of Num whose instances include Double (e.g., 3.5), and Integer (e.g., 123) is a type that is an instance of Num. Type classes provide a form of function overloading since the functions declared in a class can be given new implementations for new types by defining instances of the class.
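To make the idea of overloading concrete, here is a minimal sketch. The class Describable and its two instances are hypothetical names invented for this illustration; they are not part of the book’s example repository or the standard library.

```haskell
-- A hypothetical type class: every type with an instance gets its own 'describe'.
class Describable a where
  describe :: a -> String

-- Instance for Bool: one implementation of 'describe'.
instance Describable Bool where
  describe True  = "yes"
  describe False = "no"

-- Instance for Integer: a different implementation of the same function name.
instance Describable Integer where
  describe n = "the number " ++ show n

main :: IO ()
main = do
  putStrLn (describe True)             -- uses the Bool instance: yes
  putStrLn (describe (42 :: Integer))  -- uses the Integer instance: the number 42
```

The compiler picks the implementation from the type of the argument, which is why the literal 42 is annotated: without the annotation there would be no way to tell which instance to use.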

In line 16 we are using the ghci command :l to load the external file Simple.hs. This file contains a function called main so we can execute main after loading the file. The contents of Simple.hs are:

1 module Main where
2
3 sum2 x y = x + y
4
5 main = do
6   putStrLn ("1 + 2 = " ++ show (sum2 1 2))

Line 1 defines a module named Main. The rest of this file is the definition of the module. This form of the module declaration, with no export list, exports all top-level symbols, so other code loading this module has access to sum2 and main. If we only wanted to export main then we could use:

module Main (main) where

The function sum2 takes two arguments and adds them together. I didn’t define the type of this function so Haskell does it for us using type inference.
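As a sketch of both ideas together, here is a variant of Simple.hs (not a file from the repository) that restricts the export list to main and writes out the type signature that GHC would otherwise infer for sum2:

```haskell
-- Export only 'main'; sum2 stays private to this module.
module Main (main) where

-- An explicit signature matching the type GHC infers for sum2.
sum2 :: Num a => a -> a -> a
sum2 x y = x + y

main :: IO ()
main = do
  putStrLn ("1 + 2 = " ++ show (sum2 1 2))      -- defaults to Integer: 1 + 2 = 3
  putStrLn ("1.5 + 2 = " ++ show (sum2 1.5 2))  -- defaults to Double: 1.5 + 2 = 3.5
```

Writing signatures for top-level functions is optional, but it documents intent and makes type error messages easier to read.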

1 *Main> :l Simple.hs

2 [1 of 1] Compiling Main ( Simple.hs, interpreted )

3 Ok, modules loaded: Main.

4 *Main> :t sum2

5 sum2 :: Num a => a -> a -> a

6 *Main> sum2 1 2

7 3

8 *Main> sum2 1.0 2

9 3.0

10 *Main> :t 3.0

11 3.0 :: Fractional a => a

12 *Main> :t 3

13 3 :: Num a => a

14 *Main> (toInteger 3)

15 3

16 *Main> :t (toInteger 3)

17 (toInteger 3) :: Integer

18 *Main>

What if you want to build a standalone executable program from the example in Simple.hs? Here is an example:

1 $ stack ghc Simple.hs

2 [1 of 1] Compiling Main ( Simple.hs, Simple.o )

3 Linking Simple ...

4 $ ./Simple

5 1 + 2 = 3

Most of the time we will use simple types built into Haskell: characters, strings, lists, and tuples. The type Char is a single character. One type of string is a list of characters, [Char]. (Another type, ByteString, will be covered in later chapters.) Every element in a list must have the same type. A tuple is like a list but its elements can have different types. Here is a quick introduction to these types, with many more examples later:

1 *Main> :t 's'

2 's' :: Char

3 *Main> :t "tree"

4 "tree" :: [Char]

5 *Main> 's' : "tree"

6 "stree"

7 *Main> :t "tick"

8 "tick" :: [Char]

9 *Main> 's' : "tick"

10 "stick"

11 *Main> :t [1,2,3,4]

12 [1,2,3,4] :: Num t => [t]

13 *Main> :t [1,2,3.3,4]

14 [1,2,3.3,4] :: Fractional t => [t]

15 *Main> :t ["the", "cat", "slept"]

16 ["the", "cat", "slept"] :: [[Char]]

17 *Main> ["the", "cat", "slept"] !! 0

18 "the"

19 *Main> head ["the", "cat", "slept"]

20 "the"

21 *Main> tail ["the", "cat", "slept"]

22 ["cat","slept"]

23 *Main> ["the", "cat", "slept"] !! 1

24 "cat"

25 *Main> :t (20, 'c')

26 (20, 'c') :: Num t => (t, Char)

27 *Main> :t (30, "dog")

28 (30, "dog") :: Num t => (t, [Char])

29 *Main> :t (1, "10 Jackson Street", 80211, 77.5)

30 (1, "10 Jackson Street", 80211, 77.5)

31 :: (Fractional t2, Num t, Num t1) => (t, [Char], t1, t2)

The GHCi repl command :t tells us the type of any expression or function. Much of your time developing Haskell will be spent with an open repl and you will find yourself checking types many times during a development session.

In line 1 you see that the type of 's' is 's' :: Char, and in line 3 that the type of the string “tree” is [Char], which is a list of characters. The abbreviation String is defined for [Char]; you can use either. In lines 5 and 9 we see the “cons” operator : used to prepend a character to a list of characters. The cons operator : works with lists of any element type, but all elements in a list must be of the same type.

The type of the list of numbers [1,2,3,4] in line 11 is [1,2,3,4] :: Num t => [t]. Num is a type class for numeric types. The expression Num t => [t] is read as: “t is a type variable constrained to be an instance of Num, and the type of the list is [t], a list of values of that numeric type”. It bears repeating: all elements in a list must be of the same type. The functions head and tail used in lines 19 and 21 return the first element of a list and the list without its first element, respectively.

You will use lists frequently but the restriction of all list elements being the same type can be too restrictive so Haskell also provides a type of sequence called tuple whose elements can be of different types as in the examples in lines 25-31.
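Here is a small sketch that collects the list and tuple operations above into one file; the names animals and record are made-up example values.

```haskell
-- Lists: every element has the same type.
animals :: [String]
animals = ["cat", "dog", "bird"]

-- Tuples: a fixed number of elements that may have different types.
record :: (Int, String, Double)
record = (1, "10 Jackson Street", 77.5)

main :: IO ()
main = do
  print ('s' : "tree")                -- cons a Char onto a String: "stree"
  print ("fish" : animals)            -- cons a String onto a [String]
  print (head animals, tail animals)  -- ("cat",["dog","bird"])
  print record                        -- (1,"10 Jackson Street",77.5)
```

Note that animals is unchanged after "fish" : animals; cons builds a new list that shares the old one as its tail.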

Tuples of length 2 (pairs) are special because the functions fst and snd are provided to access the first and second values of a pair:

*Main> fst (1, "10 Jackson Street")

1

*Main> snd (1, "10 Jackson Street")

"10 Jackson Street"

*Main> :info fst

fst :: (a, b) -> a -- Defined in ‘Data.Tuple’

*Main> :info snd

snd :: (a, b) -> b -- Defined in ‘Data.Tuple’

Please note that fst and snd will not work with tuples that are not of length 2. Also note that if you use the function length on a pair, the result is always one: pairs are defined as Foldable types in a way that counts only the second component. We will use Foldable later.

Haskell provides a concise notation to get values out of long tuples. This notation is called destructuring (in Haskell it is a form of pattern matching):

1 *Main> let geoData = (1, "10 Jackson Street", 80211, 77.5)

2 *Main> let (_,_,zipCode,temperature) = geoData

3 *Main> zipCode

4 80211

5 *Main> temperature

6 77.5

Here, we defined a tuple geoData with the values: index, street address, zip code, and temperature. In line 2 we extract the zip code and temperature. Another reminder: we use let in lines 1-2 because we are in a repl.
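The same destructuring works directly in a function’s argument list. In this sketch the helper zipAndTemperature is a hypothetical name, and the concrete types Int and Double are an assumption chosen to keep the example simple:

```haskell
-- Hypothetical helper: pattern match on a 4-tuple in the argument list,
-- ignoring the fields we don't need with the wildcard _.
zipAndTemperature :: (Int, String, Int, Double) -> (Int, Double)
zipAndTemperature (_, _, zipCode, temperature) = (zipCode, temperature)

geoData :: (Int, String, Int, Double)
geoData = (1, "10 Jackson Street", 80211, 77.5)

main :: IO ()
main = do
  print (zipAndTemperature geoData)        -- (80211,77.5)
  print (fst (zipAndTemperature geoData))  -- 80211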

Like all programming languages, Haskell has operator precedence rules as these examples show:

1 *Main> 1 + 2 * 10

2 21

3 *Main> 1 + (2 * 10)

4 21

5 *Main> length "the"

6 3

7 *Main> length "the" + 10

8 13

9 *Main> (length "the") + 10

10 13

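The examples in lines 1-4 illustrate that the multiplication operator has a higher precedence than the addition operator. Lines 5-10 show that function application binds more tightly than any infix operator, so length "the" + 10 is parsed as (length "the") + 10.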
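These precedence rules can be checked in a tiny program; the names a1, a2, and b1 are arbitrary. In the standard Prelude, + is declared infixl 6 and * is declared infixl 7, which is why * groups first.

```haskell
-- * (infixl 7) binds more tightly than + (infixl 6).
a1, a2 :: Int
a1 = 1 + 2 * 10    -- parsed as 1 + (2 * 10)
a2 = (1 + 2) * 10  -- parentheses override the default precedence

-- Function application binds more tightly than any infix operator.
b1 :: Int
b1 = length "the" + 10  -- parsed as (length "the") + 10

main :: IO ()
main = print (a1, a2, b1)  -- (21,30,13)
```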

*Main> :t length

length :: Foldable t => t a -> Int

*Main> :t (+)

(+) :: Num a => a -> a -> a

Note that the function length starts with a lower case letter. All Haskell functions start with a lower case letter except for data constructors, which we will get to later. Foldable is a type class for containers whose elements can be iterated through and combined (folded) into a single result, as length does; we will use it shortly.
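A minimal sketch of what being Foldable means in practice, using only Prelude functions; the list numbers is a made-up example value. The same functions accept lists, Maybe values, and pairs:

```haskell
-- A few standard Foldable functions applied to different container types.
numbers :: [Int]
numbers = [10, 20, 30]

main :: IO ()
main = do
  print (length numbers, sum numbers, foldr (+) 0 numbers)  -- (3,60,60)
  print (length (Just 'x'), length (Nothing :: Maybe Char)) -- (1,0)
  print (sum (Just (5 :: Int)))                             -- 5
  print (length ("ignored", True))  -- 1: only the second component of a pair counts
```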

We saw that the function + acts as an infix operator. We can convert infix functions to prefix functions by enclosing them in parentheses:

*Main> (+) 1 2

3

*Main> div 10 3

3

*Main> 10 `div` 3

3

In this last example we also saw how a prefix function div can be used infix by enclosing it in back tick characters.
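Both directions also work for functions you define yourself. In this sketch avg2 and the operator |+| are hypothetical names made up for the illustration:

```haskell
-- A named (prefix) function...
avg2 :: Double -> Double -> Double
avg2 x y = (x + y) / 2

-- ...and a symbolic operator, defined infix; its name is (|+|).
(|+|) :: Int -> Int -> Int
a |+| b = abs a + abs b

main :: IO ()
main = do
  print (avg2 3 5)                 -- prefix use: 4.0
  print (3 `avg2` 5)               -- the same function used infix with back ticks: 4.0
  print ((|+|) (-2) 3)             -- an operator used prefix by wrapping it in parentheses: 5
  print ((-2) |+| 3)               -- the same operator used infix: 5
  print (10 `div` 3, 10 `mod` 3)   -- (3,1)
```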

1 *Main> let x3 = [1,2,3]

2 *Main> x3

3 [1,2,3]

4 *Main> let x4 = 0 : x3

5 *Main> x4

6 [0,1,2,3]

7 *Main> x3 ++ x4

8 [1,2,3,0,1,2,3]

9 *Main> x4

10 [0,1,2,3]

11 *Main> x4 !! 0

12 0

13 *Main> x4 !! 100

14 *** Exception: Prelude.!!: index too large

15 *Main> let myfunc1 x y = x ++ y

16 *Main> :t myfunc1

17 myfunc1 :: [a] -> [a] -> [a]

18 *Main> myfunc1 x3 x4

19 [1,2,3,0,1,2,3]

Usually we define functions in files and load them as we need them. Here are the contents of the file myfunc1.hs:

1 myfunc1 :: [a] -> [a] -> [a]

2 myfunc1 x y = x ++ y

The first line is a type signature for the function and is not required; here the input arguments are two lists and the output is the two lists concatenated together. In line 1 note that a is a type variable that can represent any type. However, all elements in the two function input lists and the output list are constrained to be the same type.

1 *Main> :l myfunc1.hs

2 [1 of 1] Compiling Main ( myfunc1.hs, interpreted )

3 Ok, modules loaded: Main.

4 *Main> myfunc1 ["the", "cat"] ["ran", "up", "a", "tree"]

5 ["the","cat","ran","up","a","tree"]

Please note that the stack repl auto-completes using the tab character. For example, when I was typing in “:l myfunc1.hs” I actually just typed “:l myf” and then hit the tab character to complete the file name. Experiment with auto-completion, it will save you a lot of typing. In the following example, for instance, after defining the variable sentence I can just type “se” and the tab character to auto-complete the entire variable name:

1 *Main> let sentence = myfunc1 ["the", "cat"] ["ran", "up", "a", "tree"]

2 *Main> sentence

3 ["the","cat","ran","up","a","tree"]

The function head returns the first element in a list and the function tail returns all but the first element of a list:

1 *Main> head sentence

2 "the"

3 *Main> tail sentence

4 ["cat","ran","up","a","tree"]

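We can create new functions from existing functions by supplying fewer arguments than they expect, a technique known as partial application. It works because Haskell functions are “curried”: a function of two arguments is really a function of one argument that returns another function: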

1 *Main> let p1 = (+ 1)

2 *Main> :t p1

3 p1 :: Num a => a -> a

4 *Main> p1 20

5 21

In this last example the function + takes two arguments but if we only supply one argument a function is returned as the value: in this case a function that adds 1 to an input value.
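Partial application works the same way for named functions, and operator sections let you fix either argument of an infix operator. A minimal sketch; add10, half, and twoOver are hypothetical names:

```haskell
sum2 :: Num a => a -> a -> a
sum2 x y = x + y

-- Supplying only the first argument of sum2 returns a one-argument function.
add10 :: Integer -> Integer
add10 = sum2 10

-- Operator sections partially apply an infix operator on either side.
half :: Double -> Double
half = (/ 2)     -- same as \x -> x / 2

twoOver :: Double -> Double
twoOver = (2 /)  -- same as \x -> 2 / x

main :: IO ()
main = do
  print (add10 5)                 -- 15
  print (map (sum2 1) [1, 2, 3])  -- [2,3,4]
  print (half 8, twoOver 8)       -- (4.0,0.25)
```

For a non-commutative operator like / the side matters, which is why half and twoOver give different results for the same input.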

We can also create new functions by composing existing functions using the infix function ., which, when placed between two functions, produces a new function that combines them. Let’s look at an example that uses . to combine the partially applied function (+ 1) with the function length:

1 *Main> let lengthp1 = (+ 1) . length

2 *Main> :t lengthp1

3 lengthp1 :: Foldable t => t a -> Int

4 *Main> lengthp1 "dog"

5 4

Note the order of the arguments to the infix function .: the function on the right side is applied first, then the function on the left side of the . is applied to the result.
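Here is a short sketch of this right-to-left order. The pipeline letterCount is a made-up example, built only from the Prelude functions words, map, length, and sum:

```haskell
-- (f . g) x is defined as f (g x): g runs first, then f.
lengthp1 :: String -> Int
lengthp1 = (+ 1) . length

-- Three functions composed; read right to left:
-- words splits on whitespace, map length measures each word, sum adds them.
letterCount :: String -> Int
letterCount = sum . map length . words

main :: IO ()
main = do
  print (lengthp1 "dog")                                   -- 4
  print (((+ 1) . length) "dog" == (+ 1) (length "dog"))   -- True
  print (letterCount "the cat slept")                      -- 11
```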

This is the second example where we have seen the type class Foldable, which describes types that can be iterated over, with their elements combined into a single result (as length does). We will look at Haskell types in the next section.

Introduction to Haskell Types

This is a good time to study Haskell types in more depth. We will see more material on Haskell types throughout this book, so this is just an introduction that uses a data declaration to define a type MyColors in the file MyColors.hs:

1 data MyColors = Orange | Red | Blue | Green | Silver
2   deriving (Show)

This example is incomplete so we will modify it soon. Line 1 defines the possible values for our new type MyColors. On line 2, we are asking the Haskell compiler to automatically generate a function show that converts a value of type MyColors to a string. show is a standard function and in general we want it defined for all of our types.

1 Prelude> :l colors.hs

2 [1 of 1] Compiling Main ( colors.hs, interpreted )

3 Ok, modules loaded: Main.

4 *Main> show Red

5 "Red"

6 *Main> let c1 = Green

7 *Main> c1

8 Green

9 *Main> :t c1

10 c1 :: MyColors

11 *Main> Red == Green

12

13 <interactive>:60:5:

14 No instance for (Eq MyColors) arising from a use of ‘==’

15 In the expression: Red == Green

16 In an equation for ‘it’: it = Red == Green

What went wrong here? The infix function == checks for equality and we did not define equality functions for our new type. Let’s fix the definition in the file colors.hs:

1 data MyColors = Orange | Red | Blue | Green | Silver
2   deriving (Show, Eq)

Because we are deriving Eq we are also asking the compiler to generate code to test whether two values of this type are equal. If we wanted to be able to order our colors then we would also derive Ord.

Now our new type has show, ==, and /= (inequality) defined:

1 Prelude> :l colors.hs

2 [1 of 1] Compiling Main ( colors.hs, interpreted )

3 Ok, modules loaded: Main.

4 *Main> Red == Green

5 False

6 *Main> Red /= Green

7 True

Let’s also now derive Ord to have the compiler generate a default function compare that operates on the type MyColors:

1 data MyColors = Orange | Red | Blue | Green | Silver
2   deriving (Show, Eq, Ord)

Because we are now deriving Ord the compiler will generate functions to calculate relative ordering for values of type MyColors. Let’s experiment with this:

1 *Main> :l MyColors.hs

2 [1 of 1] Compiling Main ( MyColors.hs, interpreted )

3 Ok, modules loaded: Main.

4 *Main> :t compare

5 compare :: Ord a => a -> a -> Ordering

6 *Main> compare Green Blue

7 GT

8 *Main> compare Blue Green

9 LT

10 *Main> Orange < Red

11 True

12 *Main> Red < Orange

13 False

14 *Main> Green < Red

15 False

16 *Main> Green < Silver

17 True

18 *Main> Green > Red

19 True

Notice that the compiler generates a compare function for the type MyColors that orders values by the order in which they appear in the data declaration. What if you wanted to order them in string sort order? This is very simple: we will remove Ord from the deriving clause and define our own function compare for type MyColors instead of letting the compiler generate it for us:

1 data MyColors = Orange | Red | Blue | Green | Silver
2   deriving (Show, Eq)
3
4 instance Ord MyColors where
5   compare c1 c2 = compare (show c1) (show c2)

In line 5 I am using the function show (which the compiler generated for us because we derived Show) to convert values of type MyColors to strings, and then calling the version of compare that the standard library defines for String. Now the ordering is in ascending string sort order:
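As an alternative spelling of the same instance, the standard function comparing from Data.Ord applies a function to both arguments and compares the results. This sketch is equivalent in behavior to the instance in MyColors.hs; it is not the version used in the book’s repository:

```haskell
import Data.Ord (comparing)

data MyColors = Orange | Red | Blue | Green | Silver
  deriving (Show, Eq)

-- Equivalent to: compare c1 c2 = compare (show c1) (show c2)
instance Ord MyColors where
  compare = comparing show

main :: IO ()
main = do
  print (Green > Red)          -- False: "Green" sorts before "Red"
  print (compare Blue Orange)  -- LT
```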

1 *Main> :l MyColors.hs

2 [1 of 1] Compiling Main ( MyColors.hs, interpreted )

3 Ok, modules loaded: Main.

4 *Main> Green > Red

5 False

Our new type MyColors is a simple type. Haskell also supports type classes, which can be organized into hierarchies; Foldable, which we saw earlier, is an example of a type class that other types can be instances of. For now, consider instances of Foldable to be collections like lists and trees that can be iterated over.

I want you to get in the habit of using :type and :info (usually abbreviated to :t and :i) in the GHCi repl. Stop reading for a minute now and type :info Ord in an open repl. You will get a lot of output showing you all of the types that are instances of Ord. Here is a small bit of what gets printed:

1 *Main> :i Ord

2 class Eq a => Ord a where

3 compare :: a -> a -> Ordering

4 (<) :: a -> a -> Bool

5 (<=) :: a -> a -> Bool

6 (>) :: a -> a -> Bool

7 (>=) :: a -> a -> Bool

8 max :: a -> a -> a

9 min :: a -> a -> a

10 -- Defined in ‘ghc-prim-0.4.0.0:GHC.Classes’

11 instance Ord MyColors -- Defined at MyColors.hs:4:10

12 instance (Ord a, Ord b) => Ord (Either a b)

13 -- Defined in ‘Data.Either’

14 instance Ord a => Ord [a]

15 -- Defined in ‘ghc-prim-0.4.0.0:GHC.Classes’

16 instance Ord Word -- Defined in ‘ghc-prim-0.4.0.0:GHC.Classes’

17 instance Ord Ordering -- Defined in ‘ghc-prim-0.4.0.0:GHC.Classes’

18 instance Ord Int -- Defined in ‘ghc-prim-0.4.0.0:GHC.Classes’

19 instance Ord Float -- Defined in ‘ghc-prim-0.4.0.0:GHC.Classes’

20 instance Ord Double -- Defined in ‘ghc-prim-0.4.0.0:GHC.Classes’

Lines 2 through 9 show you that Ord is a subclass of Eq that defines the function compare, the four operators <, <=, >, and >=, and the functions max and min. When we customized the compare function for the type MyColors, we only implemented compare. That is all that we needed to do, since the class provides default implementations of the other operators and functions in terms of compare.
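A short sketch showing that, with only compare defined, the rest of the Ord machinery works, including library functions such as sort from Data.List and maximum from the Prelude:

```haskell
import Data.List (sort)

data MyColors = Orange | Red | Blue | Green | Silver
  deriving (Show, Eq)

-- Only compare is defined; (<), (<=), (>), (>=), max, and min
-- fall back to default implementations written in terms of compare.
instance Ord MyColors where
  compare c1 c2 = compare (show c1) (show c2)

main :: IO ()
main = do
  print (sort [Orange, Red, Blue, Green, Silver])  -- [Blue,Green,Orange,Red,Silver]
  print (max Green Red, min Green Red)             -- (Red,Green)
  print (maximum [Orange, Blue, Silver])           -- Silver
```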

Once again, I ask you to experiment with the example type MyColors in an open GHCi repl:

1 *Main> :t max

2 max :: Ord a => a -> a -> a

3 *Main> :t Green

4 Green :: MyColors

5 *Main> :i Green

6 data MyColors = ... | Green | ... -- Defined at MyColors.hs:1:39

7 *Main> max Green Red

8 Red

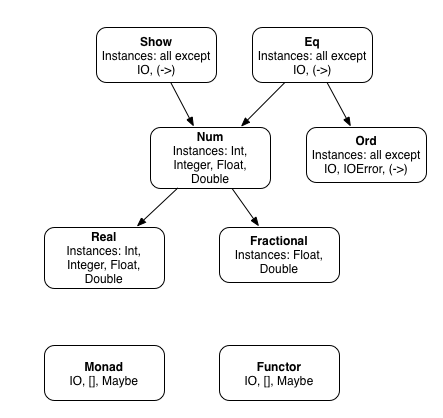

The following diagram shows a partial type hierarchy of a few types included in the standard Haskell Prelude (this is derived from the Haskell Report at haskell.org):

Here you see that type Num and Ord are sub-types of type Eq, Real is a sub-type of Num, etc. We will see the types Monad and Functor in the next chapter.

Functions Are Pure

Again, it is worth pointing out that Haskell functions do not modify their input values. The common pattern is to pass immutable values to a function, which returns modified values. As a first example of this pattern we will look at the standard function map, which takes two arguments: a function that converts a value of any type a to another type b, and a list of type a. Functions that take other functions as arguments are called higher order functions. The result is another list of the same length whose elements are of type b and are calculated using the function passed as the first argument. Let’s look at a simple example using the function (+ 1) that adds 1 to a value:

1 *Main> :t map

2 map :: (a -> b) -> [a] -> [b]

3 *Main> map (+ 1) [10,20,30]

4 [11,21,31]

5 *Main> map (show . (+ 1)) [10,20,30]

6 ["11","21","31"]

In the first example, types a and b are the same numeric type (an instance of Num). The second example uses a composed function that adds 1 and then converts the result to a string. Remember: the function show converts a Haskell data value to a string. In this second example types a and b are different because the function maps a number to a string.
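As a small sketch of these ideas (the names applyTwice and wordLengths are hypothetical and not part of the example repository), here is a module that defines its own higher order function and uses map with different types a and b:

```haskell
module Main where

-- A hypothetical higher order function: it takes a function f
-- and applies it twice to the second argument.
applyTwice :: (a -> a) -> a -> a
applyTwice f x = f (f x)

-- map where a is String and b is Int.
wordLengths :: [String] -> [Int]
wordLengths = map length

main :: IO ()
main = do
  print (applyTwice (+ 1) 10)              -- 12
  print (map (applyTwice (* 2)) [1, 2, 3]) -- [4,8,12]
  print (wordLengths ["cat", "horse"])     -- [3,5]
```

Notice that applyTwice is itself passed to map after being partially applied, so higher order functions compose naturally.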

The directory haskell_tutorial_cookbook_examples/Pure contains the examples for this chapter. We previously used the example file Simple.hs. Please note that in the rest of this book I will omit the git repository top level directory name haskell_tutorial_cookbook_examples and just specify the sub-directory name:

1 module Main where

2

3 sum2 x y = x + y

4

5 main = do

6 putStrLn ("1 + 2 = " ++ show (sum2 1 2))

For now let’s just look at the mechanics of executing this file without using the REPL (started with stack ghci). We can simply build and run this example using stack, which is covered in some detail in Appendix A:

stack build --exec Simple

This command builds the project defined in the configuration files Pure.cabal and stack.yaml (the format and use of these files is briefly covered in Appendix A and there is more reference material here). This example defines two functions: sum2 and main. sum2 is a pure Haskell function with no state, no interaction with the outside world like file IO, etc., and no non-determinism. main is impure (it is an IO action), and we will look at impure Haskell code in some detail in the next chapter. As you might guess, the output of this code snippet is:

1 + 2 = 3

To continue the tutorial on using pure Haskell functions, once again we will use stack to start an interactive repl during development:

1 markw@linux:~/haskell_tutorial_cookbook_examples/Pure$ stack ghci

2 *Main> :t 3

3 3 :: Num a => a

4 *Main> :t "dog"

5 "dog" :: [Char]

6 *Main> :t main

7 main :: IO ()

8 *Main>

In this last listing I don’t show the information about your Haskell environment and the packages that were loaded. In repl listings in the remainder of this book I will continue to edit out this Haskell environment information for brevity.

Line 4 shows the use of the repl shortcut :t to print out the type of a string, which is a list of characters, [Char]. Line 6 shows that main has the type IO (): main is an IO action. An IO action contains impure code where we can read and write files, perform network operations, etc., and we will look at IO actions in the next chapter.

Using Parentheses or the Special $ Character and Operator Precedence

We will look at operator and function precedence and the use of the $ character to reduce the number of parentheses in expressions. By the way, in Haskell there is not much difference between operators and functions, except that operators like + are infix by default while functions are prefix by default. So except for functions that are used infix by enclosing them in backticks (e.g., 10 `div` 3), Haskell uses prefix function application: a function followed by zero or more arguments. You can also use $, which acts like an opening parenthesis with an implied closing parenthesis at the end of the current expression (which may span multiple lines). Here are some examples:

1 *Main> print (3 * 2)

2 6

3 *Main> print $ 3 * 2

4 6

5 *Main> last (take 10 [1..])

6 10

7 *Main> last $ take 10 [1..]

8 10

9 *Main> ((take 10 [1..]) ++ (take 10 [1000..]))

10 [1,2,3,4,5,6,7,8,9,10,1000,1001,1002,1003,1004,1005,1006,1007,1008,1009]

11 *Main> take 10 [1..] ++ take 10 [1000..]

12 [1,2,3,4,5,6,7,8,9,10,1000,1001,1002,1003,1004,1005,1006,1007,1008,1009]

13 *Main> 1 + 2 * (4 * 5)

14 41

15 *Main> 2 * 3 + 10 * 30

16 306
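The three definitions below are a sketch (the names are my own) showing that nested parentheses, chained $, and function composition with . followed by a single $ all compute the same value. $ is declared infixr 0, the lowest precedence, which is why everything to its right is evaluated before being passed to the function on its left:

```haskell
module Main where

-- Nested parentheses.
withParens :: Int
withParens = sum (map (* 2) (take 3 [1 ..]))

-- Each $ replaces an opening parenthesis whose closing
-- parenthesis is at the end of the expression.
withDollar :: Int
withDollar = sum $ map (* 2) $ take 3 [1 ..]

-- Compose the functions with . and apply the result once with $.
withCompose :: Int
withCompose = sum . map (* 2) . take 3 $ [1 ..]

main :: IO ()
main = print [withParens, withDollar, withCompose] -- [12,12,12]
```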

I use the GHCi command :info (:i is an abbreviation) to check both the precedence of an operator and its function signature when it is converted to a function by enclosing it in parentheses:

1 *Main> :info *

2 class Num a where

3 ...

4 (*) :: a -> a -> a

5 ...

6 -- Defined in ‘GHC.Num’

7 infixl 7 *

8 *Main> :info +

9 class Num a where

10 (+) :: a -> a -> a

11 ...

12 -- Defined in ‘GHC.Num’

13 infixl 6 +

14 *Main> :info `div`

15 class (Real a, Enum a) => Integral a where

16 ...

17 div :: a -> a -> a

18 ...

19 -- Defined in ‘GHC.Real’

20 infixl 7 `div`

21 *Main> :i +

22 class Num a where

23 (+) :: a -> a -> a

24 ...

25 -- Defined in ‘GHC.Num’

26 infixl 6 +

Notice how + (infixl 6) has lower precedence than * (infixl 7), and that `div` has the same precedence as *.
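The infixl 6 and infixl 7 lines printed by :info are ordinary fixity declarations, and you can write them for your own operators too. This is a sketch with a hypothetical operator |+| that averages two numbers; giving it precedence 6, the same as +, means it binds more loosely than *:

```haskell
module Main where

-- Same precedence and associativity as +.
infixl 6 |+|

-- A hypothetical operator returning the average of two numbers.
(|+|) :: Double -> Double -> Double
a |+| b = (a + b) / 2

main :: IO ()
main = do
  print (2 * 3 |+| 4)   -- parsed as (2 * 3) |+| 4, prints 5.0
  print (2 * (3 |+| 4)) -- prints 7.0
```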

To be clear, make sure you understand how operators can be used as functions and how functions can be used as infix operators:

1 *Main> 2 * 3

2 6

3 *Main> (*) 2 3

4 6

5 *Main> 10 `div` 3

6 3

7 *Main> div 10 3

8 3

Especially when you are just starting to use Haskell it is a good idea to also use :info to check the type signatures of standard functions that you use. For example:

1 *Main> :info last

2 last :: [a] -> a -- Defined in ‘GHC.List’

3 *Main> :info map

4 map :: (a -> b) -> [a] -> [b] -- Defined in ‘GHC.Base’

Lazy Evaluation

Haskell is referred to as a lazy language because expressions are not evaluated until their values are needed. Consider the following example:

1 $ stack ghci

2 *Main> [0..10]

3 [0,1,2,3,4,5,6,7,8,9,10]

4 *Main> take 11 [0..]

5 [0,1,2,3,4,5,6,7,8,9,10]

6 *Main> let xs = [0..]

7 *Main> :sprint xs

8 xs = _

9 *Main> take 5 xs

10 [0,1,2,3,4]

11 *Main> :sprint xs

12 xs = _

13 *Main>

In line 2 we are creating a list with 11 elements. In line 4 we are doing two things:

- Creating an infinitely long list containing ascending integers starting with 0.

- Fetching the first 11 elements of this infinitely long list. It is important to understand that in line 4 only the first 11 elements are generated because that is all the take function requires.

In line 6 we bind another infinitely long list to the variable xs, but the value of xs is not evaluated; instead a placeholder (a thunk) is stored that calculates values as they are required. In line 7 we use GHCi’s :sprint command to show a value without evaluating it. The _ in the output on line 8 indicates that the expression has not yet been evaluated.

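Lines 9 through 12 remind us that Haskell is a functional language: the take function used in line 9 does not change the value of its argument. The reason :sprint still shows xs as unevaluated in line 12 is that xs has the polymorphic type (Num a, Enum a) => [a], so GHCi recomputes it each time it is used instead of sharing the evaluated elements. If you give xs a concrete type, for example let xs = [0..] :: [Int], then after evaluating take 5 xs the command :sprint xs shows xs = 0 : 1 : 2 : 3 : 4 : _.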
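Laziness is also what makes it safe to define infinite lists in source files, not just in the repl. In this sketch (the names squares and smallSquares are illustrative) only the elements that take and takeWhile demand are ever computed:

```haskell
module Main where

-- An infinite list of squares; elements are computed only on demand.
squares :: [Integer]
squares = map (^ 2) [1 ..]

-- takeWhile stops at the first element that fails the test,
-- so it terminates even though squares is infinite.
smallSquares :: [Integer]
smallSquares = takeWhile (< 50) squares

main :: IO ()
main = do
  print (take 5 squares) -- [1,4,9,16,25]
  print smallSquares     -- [1,4,9,16,25,36,49]
```

Replacing takeWhile with filter in smallSquares would print the first seven squares and then hang: filter cannot know that no later square is below 50, so it keeps examining elements forever.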

Understanding List Comprehensions

Effectively using list comprehensions makes your code shorter, easier to understand, and easier to maintain. Let’s start out with a few GHCi repl examples. You will learn a new GHCi repl trick in this section: entering multiple line expressions by using :{ and :} to delay evaluation until an entire expression is entered in the repl (listings in this section are reformatted to fit the page width):

1 *Main> [x | x <- ["cat", "dog", "bird"]]

2 ["cat","dog","bird"]

3 *Main> :{

4 *Main| [(x,y) | x <- ["cat", "dog", "bird"],

5 *Main| y <- [1..2]]

6 *Main| :}

7 [("cat",1),("cat",2),("dog",1),("dog",2),("bird",1),("bird",2)]

The list comprehension on line 1 assigns the elements of the list ["cat", "dog", "bird"] one at a time to the variable x and then collects all these values of x in a list, which is the value of the list comprehension. The list comprehension in line 1 is hopefully easy to understand, but when we bind and collect multiple variables, as in the example in lines 4 and 5, the situation is not as easy to understand. The thing to remember is that the first variable is iterated as the “outer loop” and the second variable as the “inner loop.” List comprehensions can use many variables and the iteration ordering rule is the same: the last variable varies fastest, etc.

*Main> :{

*Main| [(x,y) | x <- [0..3],

*Main| y <- [1,3..10]]

*Main| :}

[(0,1),(0,3),(0,5),(0,7),(0,9),(1,1),(1,3),(1,5),(1,7),

(1,9),(2,1),(2,3),(2,5),(2,7),(2,9),(3,1),(3,3),(3,5),

(3,7),(3,9)]

*Main> [1,3..10]

[1,3,5,7,9]

In this last example we are generating all pairs of elements from [0..3] and [1,3..10] and storing them as two-element tuples. You could also store them as lists:

1 *Main> [[x,y] | x <- [1,2], y <- [10,11]]

2 [[1,10],[1,11],[2,10],[2,11]]
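To see the iteration ordering rule with three generators, here is a small sketch (the name triples is my own). The last generator, b, changes on every element, and the first, n, changes least often:

```haskell
module Main where

-- Three generators behave like three nested loops:
-- n is the outer loop, c the middle loop, b the inner loop.
triples :: [(Int, Char, Bool)]
triples = [(n, c, b) | n <- [1, 2], c <- "ab", b <- [True, False]]

main :: IO ()
main = mapM_ print (take 4 triples)
-- (1,'a',True)
-- (1,'a',False)
-- (1,'b',True)
-- (1,'b',False)
```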

List comprehensions can also contain filtering operations. Here is an example with one filter:

1 *Main> :{

2 *Main| [(x,y) | x <- ["cat", "dog", "bird"],

3 *Main| y <- [1..10],

4 *Main| y `mod` 3 == 0]

5 *Main| :}

6 [("cat",3),("cat",6),("cat",9),

7 ("dog",3),("dog",6),("dog",9),

8 ("bird",3),("bird",6),("bird",9)]

Here is a similar example with two filters (we are also filtering out all possible values of x that start with the character ‘d’):

1 *Main> :{

2 *Main| [(x,y) | x <- ["cat", "dog", "bird"],

3 *Main| y <- [1..10],

4 *Main| y `mod` 3 == 0,

5 *Main| x !! 0 /= 'd']

6 *Main| :}

7 [("cat",3),("cat",6),("cat",9),("bird",3),("bird",6),("bird",9)]

For simple filtering cases I usually use the filter function but list comprehensions are more versatile. List comprehensions are extremely useful - I use them frequently.
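Here is a sketch comparing the two styles (the names are illustrative). The first pair expresses the same filter both ways; the second pair shows that a comprehension can filter and transform in one expression, where the function style needs both map and filter. I use take 1 x /= "d" instead of x !! 0 /= 'd' because it does not fail on an empty string:

```haskell
module Main where

animals :: [String]
animals = ["cat", "dog", "bird"]

-- The same filter written with filter and with a list comprehension.
withoutD1, withoutD2 :: [String]
withoutD1 = filter (\x -> take 1 x /= "d") animals
withoutD2 = [x | x <- animals, take 1 x /= "d"]

-- Filter and transform: map plus filter versus one comprehension.
longLengths1, longLengths2 :: [Int]
longLengths1 = map length (filter (\x -> length x > 3) animals)
longLengths2 = [length x | x <- animals, length x > 3]

main :: IO ()
main = do
  print withoutD1    -- ["cat","bird"]
  print withoutD2    -- ["cat","bird"]
  print longLengths2 -- [4]
```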

Lists are instances of the class Monad that we will cover in the next chapter (check out the section “List Comprehensions Using the do Notation”).

List comprehensions are powerful. I would like to end this section with another trick that does not use list comprehensions for building lists of tuple values: using the zip function:

1 *Main> let animals = ["cat", "dog", "bird"]

2 *Main> zip [1..] animals

3 [(1,"cat"),(2,"dog"),(3,"bird")]

4 *Main> :info zip

5 zip :: [a] -> [b] -> [(a, b)] -- Defined in ‘GHC.List’

The function zip is often used in this way when we have a list of objects and we want to operate on the list while knowing the index of each element.
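As a sketch of that pattern (the name numbered is hypothetical), zip can pair each element with its position so the position can be used when formatting output. Because zip stops at the end of the shorter list, pairing with the infinite list [1 ..] is safe:

```haskell
module Main where

animals :: [String]
animals = ["cat", "dog", "bird"]

-- Number each element, starting at 1.
numbered :: [String]
numbered = [show i ++ ". " ++ a | (i, a) <- zip [(1 :: Int) ..] animals]

main :: IO ()
main = mapM_ putStrLn numbered
-- 1. cat
-- 2. dog
-- 3. bird
```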

Haskell Rules for Indenting Code

When a line of code is indented relative to the previous line of code, or several lines of code with additional indentation, then the indented lines act as if they were on the previous line. In other words, if code that should all be on one line must be split to multiple lines, then use indentation as a signal to the Haskell compiler.

Indentation of continuation lines should be uniform, starting in the same column. Here are some examples of good code, and code that will not compile:

1 let a = 1      -- good

2     b = 2      -- good: b is in the same column as a

3     c = 3      -- good: c is in the same column as a

4

5 let

6   a = 1        -- good

7   b = 2        -- good

8   c = 3        -- good

9  in a + b + c  -- good

10

11 let a = 1     -- will not compile (bad)

12       b = 2   -- bad: indented past a, so read as a continuation of line 11

13   c = 3       -- bad: indented less than a, so it ends the let block

14

15 let

16   a = 1       -- will not compile (bad)

17     b = 2     -- bad: indented past a

18 c = 3         -- bad: indented less than a

19

20 let {

21   a = 1;      -- compiles but bad style

22     b = 2;    -- compiles: braces and semicolons make indentation irrelevant

23       c = 3;  -- compiles but bad style

24 }

If you use C-style braces and semicolons to mark the end of each binding, then indentation does not matter, as seen in lines 20 through 24. Otherwise, the compiler uses indentation (Haskell’s layout rule) to decide where each binding starts and ends, so uniform indentation is required.
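Here is a sketch of the same hypothetical function written both ways, once using layout and once using explicit braces and semicolons. It computes the area left inside a w-by-h rectangle after removing a border of width b on every side:

```haskell
module Main where

-- Layout version: innerW and innerH start in the same column.
innerArea :: Double -> Double -> Double -> Double
innerArea w h b =
  let innerW = w - 2 * b
      innerH = h - 2 * b
  in innerW * innerH

-- Explicit braces and semicolons: the bindings do not need to line up.
innerArea' :: Double -> Double -> Double -> Double
innerArea' w h b = let { innerW = w - 2 * b; innerH = h - 2 * b } in innerW * innerH

main :: IO ()
main = do
  print (innerArea 10 6 1)  -- 32.0
  print (innerArea' 10 6 1) -- 32.0
```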

The same indentation rules apply to the other kinds of blocks that we will see throughout this book, such as do blocks, where clauses, and case expressions.

Understanding let and where

At first glance, let and where seem very similar in that they allow us to create temporary variables used inside functions. As the examples in the file LetAndWhere.hs show, there are important differences.

In the following code notice that when we use let in pure code inside a function, we then use in to indicate the start of an expression that uses the variables defined in the let. Inside a do block the in token is not needed: the let bindings are visible in the rest of the block, and adding an in on its own line is a common cause of parse errors. do blocks are syntactic sugar for monadic code, such as impure IO code, and we will use them frequently later in the book.

You also do not use in inside a list comprehension as seen in the function testLetComprehension in the next code listing:

1 module Main where

2

3 funnySummation w x y z =

4 let bob = w + x

5 sally = y + z

6 in bob + sally

7

8 testLetComprehension =

9 [(a,b) | a <- [0..5], let b = 10 * a]

10

11 testWhereBlocks a =

12 z * q

13 where

14 z = a + 2

15 q = 2

16

17 functionWithWhere n =

18 (n + 1) * tenn

19 where

20 tenn = 10 * n

21

22 main = do

23 print $ funnySummation 1 2 3 4

24 let n = "Rigby"

25 print n

26 print testLetComprehension

27 print $ testWhereBlocks 11

28 print $ functionWithWhere 1

Compare the let expressions starting on lines 4 and 24. The first let occurs in pure code and uses in to introduce an expression that uses the values bound in the let. In line 24 we are inside a monad, specifically using the do notation, and here let is used to define pure values that can be used later in the do block.

Loading the last code example and running the main function produces the following output:

1 *Main> :l LetAndWhere.hs

2 [1 of 1] Compiling Main ( LetAndWhere.hs, interpreted )

3 Ok, modules loaded: Main.

4 *Main> main

5 10

6 "Rigby"

7 [(0,0),(1,10),(2,20),(3,30),(4,40),(5,50)]

8 26

9 20

This output is self-explanatory except perhaps for line 7, which is the result of calling testLetComprehension; that function returns the value of the list comprehension [(a,b) | a <- [0..5], let b = 10 * a].
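One important difference between let and where is scope: a where clause is attached to a whole function equation, so its bindings are visible in every guard (guards are covered later in this chapter). Here is a sketch with a hypothetical function describeTemp that converts Fahrenheit to Celsius once and uses the result in three guards:

```haskell
module Main where

-- celsius, defined in the where clause, is shared by all three guards.
describeTemp :: Double -> String
describeTemp fahrenheit
  | celsius < 0  = "freezing"
  | celsius < 20 = "mild"
  | otherwise    = "warm"
  where
    celsius = (fahrenheit - 32) * 5 / 9

main :: IO ()
main = mapM_ (putStrLn . describeTemp) [14, 50, 86]
-- freezing
-- mild
-- warm
```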

Conditional Expressions and Anonymous Functions

The examples in the next three sub-sections can be found in haskell_tutorial_cookbook_examples/Pure/Conditionals.hs. You should read the following sub-sections with this file loaded (some GHCi repl output removed for brevity):

1 haskell_tutorial_cookbook_examples/Pure$ stack ghci

2 *Main> :l Conditionals.hs

3 [1 of 1] Compiling Main ( Conditionals.hs, interpreted )

4 Ok, modules loaded: Main.

5 *Main>

Simple Pattern Matching

We previously used the built-in functions head, which returns the first element of a list, and tail, which returns a list with the first element removed. We will define these functions ourselves using what is called wild card pattern matching. It is common to append the single quote character ' to the names of built-in functions when we redefine them, so we name our new functions head' and tail'. Remember when we used destructuring to access elements of a tuple? Wild card pattern matching is similar:

head'(x:_) = x

tail'(_:xs) = xs

The underscore character _ matches anything and ignores the matched value. Our head and tail definitions work as expected:

1 *Main> head' ["bird","dog","cat"]

2 "bird"

3 *Main> tail' [0,1,2,3,4,5]

4 [1,2,3,4,5]

5 *Main> :type head'

6 head' :: [t] -> t

7 *Main> :t tail'

8 tail' :: [t] -> [t]
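Like the built-in head and tail, head' and tail' fail with a “Non-exhaustive patterns” error when given an empty list. As a sketch (safeHead and safeTail are hypothetical names), adding a pattern for [] and returning the Maybe type, which this chapter introduces in the section on guards, gives versions that never crash:

```haskell
module Main where

-- Return Nothing for an empty list instead of failing.
safeHead :: [a] -> Maybe a
safeHead []    = Nothing
safeHead (x:_) = Just x

safeTail :: [a] -> Maybe [a]
safeTail []     = Nothing
safeTail (_:xs) = Just xs

main :: IO ()
main = do
  print (safeHead ["bird", "dog", "cat"]) -- Just "bird"
  print (safeHead ([] :: [Int]))          -- Nothing
  print (safeTail [0, 1, 2])              -- Just [1,2]
```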

Of course we frequently do not want to ignore matched values. Here is a contrived example that expects a list of numbers and doubles the value of each element. As for all of the examples in this chapter, the following function is pure: it cannot modify its argument(s) and always returns the same value given the same input argument(s):

1 doubleList [] = []

2 doubleList (x:xs) = (* 2) x : doubleList xs

In line 1 we start by defining a pattern to match the empty list. It is necessary to define this terminating condition because we are using recursion in line 2 and eventually we reach the end of the input list and make the recursive call doubleList []. If you leave out line 1 you then will see a runtime error like “Non-exhaustive patterns in function doubleList.” As a Haskell beginner you probably hate Haskell error messages and as you start to write your own functions in source files and load them into a GHCi repl or compile them, you will initially probably hate compilation error messages also. I ask you to take on faith a bit of advice: Haskell error messages and warnings will end up saving you a lot of effort getting your code to work properly. Try to develop the attitude “Great! The Haskell compiler is helping me!” when you see runtime errors and compiler errors.

In line 2 notice how I didn’t need to use extra parentheses because of the operator and function application precedence rules.

1 *Main> doubleList [0..5]

2 [0,2,4,6,8,10]

3 *Main> :t doubleList

4 doubleList :: Num t => [t] -> [t]

This function doubleList seems very unsatisfactory because it is so specific. What if we wanted to triple or quadruple the elements of a list? Do we want to write two new functions? You might think of adding an argument that is the multiplier like this:

1 bumpList n [] = []

2 bumpList n (x:xs) = n * x : bumpList n xs

This version is better, being more abstract and more general purpose. However, we can do much better.

Before generalizing the list manipulation process further, I would like to make a comment on coding style, specifically on not using unneeded parentheses. In the last example defining bumpList, if you have superfluous parentheses like this:

bumpList n (x:xs) = (n * x) : (bumpList n xs)

then the code still works correctly and is fairly readable. I would like you to get in the habit of avoiding extra unneeded parentheses, and one tool for doing this is running hlint (installing hlint is covered in Appendix A) on your Haskell code. Running hlint on a source file will provide warnings/suggestions like this:

haskell_tutorial_cookbook_examples/Pure$ hlint Conditionals.hs

Conditionals.hs:7:21: Warning: Redundant bracket

Found:

((* 2) x) : doubleList (xs)

Why not:

(* 2) x : doubleList (xs)

Conditionals.hs:7:43: Error: Redundant bracket

Found:

(xs)

Why not:

xs

hlint is not only a tool for improving your code but also for teaching you how to program better in Haskell. Please note that hlint provides other suggestions for Conditionals.hs that I am ignoring; they mostly suggest that I replace our mapping operations with the built-in map function and use function composition. The sample code is specifically meant to show examples of pattern matching and is not as concise as it could be.

Are you satisfied with the generality of the function bumpList? I hope that you are not! We should write a function that will apply an arbitrary function to each element of a list. We will call this function map' to avoid confusing it with the built-in function map.

The following is a simple implementation of a map function (we will see Haskell’s standard map functions in the next section):

1 map' f [] = []

2 map' f (x:xs) = f x : map' f xs

In line 2 we do not need parentheses around f x because function application has a higher precedence than the operator :, which adds an element to the beginning of a list.

Are you pleased with how concise this definition of a map function is? Is concise code like map' readable to you? Speaking as someone who has written hundreds of thousands of lines of Java code for customers, let me tell you that I love the conciseness and readability of Haskell! I appreciate the Java ecosystem with its many useful libraries and frameworks, augmented by fine languages like Clojure and JRuby, but in my opinion Haskell is a more enjoyable and generally more productive language and programming environment.

Let’s experiment with our map’ function:

1 *Main> map' (* 7) [0..5]

2 [0,7,14,21,28,35]

3 *Main> map' (+ 1.1) [0..5]

4 [1.1,2.1,3.1,4.1,5.1,6.1]

5 *Main> map' (\x -> (x + 1) * 2) [0..5]

6 [2,4,6,8,10,12]

Lines 1 and 3 should be understandable to you: we are creating a partially applied function like (* 7) (an operator section) and passing it to map' to apply to each element of the list [0..5].

The syntax for the function in line 5 is called an anonymous function. Lisp programmers, like myself, refer to this as a lambda expression. In any case, I often prefer using anonymous functions when a function will not be used elsewhere. In line 5 the argument to the anonymous inline function is x and the body of the function is (x + 1) * 2.

I do ask you not to get carried away with using too many anonymous inline functions because they can make code a little less readable.

When we put our code in modules, by default every symbol (like function names) in the module is externally visible. However, if we explicitly list exported symbols in a module declaration, then only those symbols are visible to other code that uses the module. Here is an example:

module Test2 (doubler) where

map' f [] = []

map' f (x:xs) = f x : map' f xs

testFunc x = (x + 1) * 2

doubler xs = map' (* 2) xs

In this example map' and testFunc are hidden: any other module that imports Test2 only has access to doubler. It might help to think of the exported functions roughly as the interface of a module.

Pattern Matching With Guards

We will cover two important concepts in this section: using guards to make function definitions shorter and easier to read, and the Maybe type and how it is used. We will use the Maybe type heavily later, especially in impure Haskell code. The Maybe type is a Monad (covered in the next chapter). I introduce the Maybe type here since its use fits naturally with guard patterns.

Guards are more flexible than the pattern matching seen in the last section. I use pattern matching for simple cases of destructuring data and guards when I need the flexibility. You may want to revisit the examples in the last section after experimenting with and understanding the examples seen here.

The examples for this section are in the file Guards.hs. As a first simple example we will implement the Ruby language “spaceship operator”:

1 spaceship n

2 | n < 0 = -1

3 | n == 0 = 0

4 | otherwise = 1

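Notice on line 1 that we do not put an = after the function arguments when using guards. Each guard starts with |, is followed by a condition, and then = and the value to return when that condition is true. Guards are tried from top to bottom, and otherwise is simply a synonym for True, so it catches every remaining case.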

1 *Main> spaceship (-10)

2 -1

3 *Main> spaceship 0

4 0

5 *Main> spaceship 17

6 1

Remember that a literal negative number as seen in line 1 must be wrapped in parentheses; otherwise the Haskell compiler will interpret - as the binary subtraction operator, reading spaceship -10 as spaceship - 10.
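The introduction to this section noted that Maybe fits naturally with guards. As a sketch (safeDiv is a hypothetical name, not a Prelude function), guards can choose between Nothing and a value wrapped in Just, so a division by zero becomes an ordinary return value instead of a runtime exception:

```haskell
module Main where

-- Nothing signals "no answer"; Just wraps a successful result.
safeDiv :: Int -> Int -> Maybe Int
safeDiv n d
  | d == 0    = Nothing
  | otherwise = Just (n `div` d)

main :: IO ()
main = do
  print (safeDiv 10 3) -- Just 3
  print (safeDiv 10 0) -- Nothing
```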

Case Expressions

Case expressions match a value against a list of patterns. It is common to use the wildcard pattern _, which matches any value, as the last alternative of a case expression. Here is an example in the file Cases.hs:

1 module Main where

2

3 numberOpinion n =

4 case n of

5 0 -> "Too low"

6 1 -> "just right"

7 _ -> "OK, that is a number"

8

9 main = do

10 print $ numberOpinion 0

11 print $ numberOpinion 1

12 print $ numberOpinion 2
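Case alternatives can also destructure values, not only compare them with literals. This sketch uses a hypothetical function describeList that matches list patterns of increasing length:

```haskell
module Main where

-- The three patterns together cover every possible list.
describeList :: [Int] -> String
describeList xs =
  case xs of
    []      -> "empty"
    [x]     -> "one element: " ++ show x
    (x:y:_) -> "starts with " ++ show x ++ " and " ++ show y

main :: IO ()
main = do
  putStrLn (describeList [])        -- empty
  putStrLn (describeList [5])       -- one element: 5
  putStrLn (describeList [1, 2, 3]) -- starts with 1 and 2
```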

If Then Else expressions

Haskell has if then else syntax built into the language - if is not defined as a function. Personally I do not use if then else in Haskell very often. I mostly use simple pattern matching and guards. Here are some short examples from the file IfThenElses.hs:

ageToString age =

if age < 21 then "minor" else "adult"

Every if expression must have both a then branch and an else branch, because the whole expression must always evaluate to a value.

haskell_tutorial_cookbook_examples/Pure$ stack ghci

*Main> :l IfThenElses.hs

[1 of 1] Compiling Main ( IfThenElses.hs, interpreted )

Ok, modules loaded: Main.

*Main> ageToString 15

"minor"

*Main> ageToString 37

"adult"

Maps

Maps are simple to construct using a list of key-value tuples and are by default immutable. There is an example using mutable maps in the next chapter.

We will look at the module Data.Map first in a GHCi repl, then later in a few full code examples. There is something new in line 2 of the following listing: I am assigning a short alias M to the module Data.Map. When referencing a function like fromList (which converts a list of tuples to a map) in the Data.Map module, I can use M.fromList instead of Data.Map.fromList. This is a common practice, so when you read someone else’s Haskell source file, one of the first things you should do is make note of the module name abbreviations at the top of the file.

1 haskell_tutorial_cookbook_examples/Pure$ stack ghci

2 *Main> import qualified Data.Map as M

3 *Main M> :t M.fromList

4 M.fromList :: Ord k => [(k, a)] -> M.Map k a

5 *Main M> let aTestMap = M.fromList [("height", 120), ("weight", 15)]

6 *Main M> :t aTestMap

7 aTestMap :: Num a => M.Map [Char] a

8 *Main M> :t lookup

9 lookup :: Eq a => a -> [(a, b)] -> Maybe b

10 *Main M> :t M.lookup

11 M.lookup :: Ord k => k -> M.Map k a -> Maybe a

12 *Main M> M.lookup "weight" aTestMap

13 Just 15

14 *Main M> M.lookup "address" aTestMap

15 Nothing

The keys in a map must all be the same type and the values are also constrained to be of the same type. I almost always create maps using the helper function fromList in the module Data.Map. We will only be using this method of map creation in later examples in this book so I am skipping coverage of other map building functions. I refer you to the Data.Map documentation.
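Because maps are immutable, “modifying” a map really means building a new one. As a sketch (the names original and updated are my own), M.insert returns a new map while the map it was given is left unchanged:

```haskell
module Main where

import qualified Data.Map as M -- from the containers package

original :: M.Map String Int
original = M.fromList [("height", 120), ("weight", 15)]

-- M.insert returns a new map; original still has only two entries.
updated :: M.Map String Int
updated = M.insert "age" 7 original

main :: IO ()
main = do
  print (M.toList original) -- [("height",120),("weight",15)]
  print (M.toList updated)  -- [("age",7),("height",120),("weight",15)]
```

M.toList returns the entries in ascending key order, which is why "age" comes first.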

The following example shows one way to use the Just and Nothing return values:

1 module MapExamples where

2

3 import qualified Data.Map as M -- from library containers

4

5 aTestMap = M.fromList [("height", 120), ("weight", 15)]

6

7 getNumericValue key aMap =

8 case M.lookup key aMap of

9 Nothing -> -1

10 Just value -> value

11

12 main = do

13 print $ getNumericValue "height" aTestMap

14 print $ getNumericValue "age" aTestMap

The function getNumericValue shows one way to extract a value from an instance of type Maybe. The function M.lookup returns a Maybe value, and in this example I use a case expression to test for a Nothing value or extract the value wrapped in a Just. Using Maybe in Haskell is a better alternative to checking for null values in C or Java.
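When all you need is a default value for a missing key, there are shorter alternatives to the case expression. This sketch (the function names are my own) shows fromMaybe from Data.Maybe and M.findWithDefault from Data.Map, both of which behave like getNumericValue:

```haskell
module Main where

import Data.Maybe (fromMaybe)
import qualified Data.Map as M -- from the containers package

aTestMap :: M.Map String Int
aTestMap = M.fromList [("height", 120), ("weight", 15)]

-- fromMaybe returns the default when given Nothing.
getWithFromMaybe :: String -> M.Map String Int -> Int
getWithFromMaybe key aMap = fromMaybe (-1) (M.lookup key aMap)

-- findWithDefault combines the lookup and the default in one call.
getWithDefault :: String -> M.Map String Int -> Int
getWithDefault key aMap = M.findWithDefault (-1) key aMap

main :: IO ()
main = do
  print (getWithFromMaybe "height" aTestMap) -- 120
  print (getWithDefault "age" aTestMap)      -- -1
```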

The output from running the main function in module MapExamples is:

1 haskell_tutorial_cookbook_examples/Pure$ stack ghci

2 *Main> :l MapExamples.hs

3 [1 of 1] Compiling MapExamples ( MapExamples.hs, interpreted )

4 Ok, modules loaded: MapExamples.

5 *MapExamples> main

6 120

7 -1

Sets

The documentation of Data.Set.Class can be found here and contains overloaded functions for the types of sets defined here.

For most of my work and for the examples later in this book, I create immutable sets from lists and the only operation I perform is checking to see if a value is in the set. The following examples in a GHCi repl are what you need for the material in this book:

1 *Main> import qualified Data.Set as S

2 *Main S> let testSet = S.fromList ["cat","dog","bird"]

3 *Main S> :t testSet

4 testSet :: S.Set [Char]

5 *Main S> S.member "bird" testSet

6 True

7 *Main S> S.member "snake" testSet

8 False

Sets and Maps are immutable, so I find that creating maps from a list of key-value tuples and creating sets from lists is fine. That said, coming from the mutable Java, Ruby, Python, and Lisp programming languages, it took me a while to get used to immutability in Haskell.
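Here is a sketch of that create-then-check pattern in a small text processing function. The stop word list and the name removeStopWords are hypothetical; S.notMember is the negation of S.member:

```haskell
module Main where

import qualified Data.Set as S -- from the containers package

-- A hypothetical set of words to ignore, created once from a list.
stopWords :: S.Set String
stopWords = S.fromList ["a", "an", "the", "of"]

-- Split text on whitespace and keep only words not in the set.
removeStopWords :: String -> [String]
removeStopWords = filter (`S.notMember` stopWords) . words

main :: IO ()
main = print (removeStopWords "the cat of the house") -- ["cat","house"]
```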

More on Functions

In this section we will review what you have learned so far about Haskell functions and then look at a few more complex examples.

We have been defining and using simple functions and we have seen that operators behave like infix functions. We can make operators act as prefix functions by wrapping them in parentheses:

*Main> 10 + 1

11

*Main> (+) 10 1

11

and we can make functions act as infix operators:

*Main> div 100 9

11

*Main> 100 `div` 9

11

The backtick syntax for using a function as an infix operator also works with functions we write ourselves:

*Main> let myAdd a b = a + b

*Main> :t myAdd

myAdd :: Num a => a -> a -> a

*Main> myAdd 1 2

3

*Main> 1 `myAdd` 2

3

Because we are working in a GHCi repl, in the first line we use let to define the function myAdd. If you defined this function in a file and then loaded it, you would not use let (recent versions of GHCi also accept definitions without let).

In the map examples where we applied a function to a list of values, so far we have used functions that map input values to values of the same type, like this (using both partial application and an anonymous inline function):

*Main> map (* 2) [5,6]

[10,12]

*Main> map (\x -> 2 * x) [5,6]

[10,12]

We can also map to different types; in this example we map from a list of Num values to a list containing sub-lists of Num values:

1 *Main> let makeList n = [0..n]

2 *Main> makeList 3

3 [0,1,2,3]

4 *Main> map makeList [2,3,4]

5 [[0,1,2],[0,1,2,3],[0,1,2,3,4]]

As usual, I recommend that when you work in a GHCi repl you check the types of functions and values you are working with:

1 *Main> :t makeList

2 makeList :: (Enum t, Num t) => t -> [t]

3 *Main> :t [1,2]

4 [1,2] :: Num t => [t]

5 *Main> :t [[0,1,2],[0,1,2,3],[0,1,2,3,4]]

6 [[0,1,2],[0,1,2,3],[0,1,2,3,4]] :: Num t => [[t]]

7 *Main>

In line 2 we see that for any type t the function signature is t -> [t], where the compiler determines that t is constrained to be an instance of both Enum and Num by examining how the input variable is used as a range parameter for constructing a list. Let’s make a new function that works on any type:

1 *Main> let make3 x = [x,x,x]

2 *Main> :t make3

3 make3 :: t -> [t]

4 *Main> :t make3 "abc"

5 make3 "abc" :: [[Char]]

6 *Main> make3 "abc"

7 ["abc","abc","abc"]

8 *Main> make3 7.1

9 [7.1,7.1,7.1]

10 *Main> :t make3 7.1

11 make3 7.1 :: Fractional t => [t]

Notice in line 3 that the function make3 takes an input of any type and returns a list of elements of the same type as the input. We used make3 both with a string argument and with a fractional (floating point) number argument.

Comments on Dealing With Immutable Data and How to Structure Programs

If you program in other programming languages that use mutable data then expect some feelings of disorientation initially when starting to use Haskell. It is common in other languages to maintain the state of a computation in an object and to mutate the value(s) in that object. While I cover mutable state in the next chapter the common pattern in Haskell is to create a data structure (we will use lists in examples here) and pass it to functions that return a new modified copy of the data structure as the returned value from the function. It is very common to keep passing the modified new copy of a data structure through a series of function calls. This may seem cumbersome when you are starting to use Haskell but quickly feels natural.

The following example shows a simple case where a list is constructed in the function main and passed through two functions doubleOddElements and times10Elements:

1 module ChainedCalls where

2

3 doubleOddElements =

4 map (\x -> if x `mod` 2 == 0 then x else 2 * x)

5

6 times10Elements = map (* 10)

7

8 main = do

9 print $ doubleOddElements [0,1,2,3,4,5,6,7,8]

10 let aList = [0,1,2,3,4,5]

11 let newList = times10Elements $ doubleOddElements aList

12 print newList

13 let newList2 = (times10Elements . doubleOddElements) aList

14 print newList2

Notice that the expressions being evaluated in lines 11 and 13 compute the same value. In line 11 we apply the function doubleOddElements to the value of aList and pass the result to the outer function times10Elements. In line 13 we create a new function by composing two existing functions: times10Elements . doubleOddElements. The parentheses in line 13 are required because function application binds more tightly than the . operator: without them, line 13 would be parsed as times10Elements . (doubleOddElements aList), an attempt to compose a function with a list, which is not what I intended and which the compiler rejects with a type error.
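A composed function can also be given its own name and reused. This sketch repeats the two functions from ChainedCalls.hs with type signatures added (and even x in place of x `mod` 2 == 0, which is equivalent) and defines a hypothetical pipeline function process:

```haskell
module Main where

doubleOddElements :: [Int] -> [Int]
doubleOddElements = map (\x -> if even x then x else 2 * x)

times10Elements :: [Int] -> [Int]
times10Elements = map (* 10)

-- Compose once, then apply the named pipeline as often as needed.
process :: [Int] -> [Int]
process = times10Elements . doubleOddElements

main :: IO ()
main = do
  print (process [0, 1, 2, 3, 4, 5]) -- [0,20,20,60,40,100]
  print (process [7])                -- [140]
```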

The output is:

1 haskell_tutorial_cookbook_examples/Pure$ stack ghci

2 *Main> :l ChainedCalls.hs

3 [1 of 1] Compiling ChainedCalls ( ChainedCalls.hs, interpreted )

4 Ok, modules loaded: ChainedCalls.

5 *ChainedCalls> main

6 [0,2,2,6,4,10,6,14,8]

7 [0,20,20,60,40,100]

8 [0,20,20,60,40,100]

Using immutable data takes some getting used to. I am going to digress for a minute to talk about working with Haskell. The steps I take when writing new Haskell code are:

- Be sure I understand the problem

- How will data be represented - in Haskell I prefer using built-in types when possible

- Determine which Haskell standard functions, modules, and 3rd party modules might be useful

- Write and test the pure Haskell functions I think that I need for the application

- Write an impure main function that fetches required data, calls the pure functions (which remain pure even though they are called from impure code), and saves the processed data.
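Here is a minimal sketch of that structure. The word counting task and the names wordCounts and topWords are hypothetical: two pure functions do all of the processing, and a small impure main reads standard input, calls them, and prints the result:

```haskell
module Main where

import Data.Char (toLower)
import Data.List (sortOn)
import qualified Data.Map as M -- from the containers package

-- Pure: count how many times each word occurs, ignoring case.
wordCounts :: String -> M.Map String Int
wordCounts text = M.fromListWith (+) [(map toLower w, 1) | w <- words text]

-- Pure: the n most frequent words, most frequent first.
topWords :: Int -> M.Map String Int -> [(String, Int)]
topWords n counts = take n (sortOn (negate . snd) (M.toList counts))

-- Impure: read all of standard input, call the pure code, print results.
main :: IO ()
main = do
  text <- getContents
  mapM_ print (topWords 3 (wordCounts text))
```

Because wordCounts and topWords are pure, they can be tested in a GHCi repl with literal strings, without any file or console input.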

I am showing you many tiny examples but please keep in mind the entire process of writing longer programs.

Error Handling

We have seen examples of handling soft errors when no value can be calculated: use Maybe, Just, and Nothing. In bug-free pure Haskell code, runtime exceptions should be very rare and I usually do not try to trap them.

Using Maybe, Just, and Nothing is much better than, for example, throwing an error using the standard function error:

*Main> error "test error 123"
*** Exception: test error 123

and then catching the errors in impure code; the documentation for the standard Control.Exception module is a useful reference.
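A minimal sketch contrasting the two styles; safeDiv, unsafeDiv, and describeDiv are hypothetical names used only for illustration. The Maybe version puts the failure case in the type, so callers must handle it, while the error version compiles just as happily but fails at runtime.

```haskell
module Main where

-- Soft error: the type says the result may be missing.
safeDiv :: Int -> Int -> Maybe Int
safeDiv _ 0 = Nothing
safeDiv x y = Just (x `div` y)

-- Hard error: the type hides the failure case, and a zero divisor raises
-- a runtime exception when the result is evaluated.
unsafeDiv :: Int -> Int -> Int
unsafeDiv _ 0 = error "unsafeDiv: division by zero"
unsafeDiv x y = x `div` y

-- Callers of safeDiv must decide what to do with Nothing.
describeDiv :: Int -> Int -> String
describeDiv x y =
  case safeDiv x y of
    Nothing -> "cannot divide " ++ show x ++ " by zero"
    Just q  -> show x ++ " / " ++ show y ++ " = " ++ show q

main :: IO ()
main = do
  putStrLn (describeDiv 10 2)  -- 10 / 2 = 5
  putStrLn (describeDiv 10 0)  -- cannot divide 10 by zero
  print (unsafeDiv 10 2)       -- 5
  -- print (unsafeDiv 10 0) would stop the program with an exception
```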

In impure code that performs IO, accesses network resources, or could run out of memory, runtime errors can occur, and you could use the same try/catch coding style that you have probably used in other programming languages. This is admittedly a personal preference, but I don’t like to catch runtime errors. When I wrote Java applications I preferred, when possible, to leave exceptions uncaught, and I usually do the same when writing impure Haskell code.
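If you do want the try/catch style, here is a minimal sketch using try and evaluate from the standard Control.Exception module; riskyHead is a hypothetical function that calls error on an empty list. The call to evaluate matters because of laziness: without it, the error would not be raised until the value is used, which would be outside the try.

```haskell
module Main where

import Control.Exception (ErrorCall (..), evaluate, try)

-- Hypothetical function that signals failure with error.
riskyHead :: [Int] -> Int
riskyHead []      = error "riskyHead: empty list"
riskyHead (x : _) = x

-- Force the result inside try and turn an ErrorCall exception into a Left value.
safeRiskyHead :: [Int] -> IO (Either String Int)
safeRiskyHead xs = do
  result <- try (evaluate (riskyHead xs))
  return $ case result of
    Left (ErrorCall msg) -> Left msg
    Right x              -> Right x

main :: IO ()
main = do
  safeRiskyHead [3, 4] >>= print  -- Right 3
  safeRiskyHead []     >>= print  -- Left "riskyHead: empty list"
```

The pattern ErrorCall msg tells try which exception type to catch; other exception types are not caught and propagate as usual.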

Because of Haskell’s type safety and excellent testing tools, it is possible to write nearly error-free Haskell code. Later, when we perform network IO, we will rely on library support to handle errors and timeouts in a clean, “Haskell-like” way.

Testing Haskell Code

The example in this section is found in the directory haskell_tutorial_cookbook_examples/TestingHaskell.

If you use stack to create a new project then the framework for testing is generated for you:

$ cd TestingHaskell
$ ls -R
LICENSE              app                  test
Setup.hs             src
TestingHaskell.cabal stack.yaml

TestingHaskell//app:
Main.hs

TestingHaskell//src:
Lib.hs

TestingHaskell//test:
Spec.hs
$ cat test/Spec.hs
main :: IO ()
main = putStrLn "Test suite not yet implemented"
$ stack setup
$ stack build

This stack-generated project is more complex than the project I created manually in the directory haskell_tutorial_cookbook_examples/Pure. The file Setup.hs is a boilerplate build script that you rarely need to edit; it is the file TestingHaskell.cabal that specifies that the executable's Main module is in the app directory. This module, defined in app/Main.hs, imports the module Lib defined in src/Lib.hs.

The generated test does not do anything, but let’s run it anyway:

$ stack test
Registering TestingHaskell-0.1.0.0...
TestingHaskell-0.1.0.0: test (suite: TestingHaskell-test)
Progress: 1/2 Test suite not yet implemented
Completed 2 action(s).

In the generated project, I made a few changes:

- removed src/Lib.hs

- added src/MyColors.hs providing the type MyColors that we defined earlier

- modified app/Main.hs to use the MyColors type

- added tests to test/Spec.hs

Here are the contents of TestingHaskell/src/MyColors.hs:

module MyColors where

data MyColors = Orange | Red | Blue | Green | Silver
  deriving (Show, Eq)

instance Ord MyColors where
  compare c1 c2 = compare (show c1) (show c2)
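To see what ordering this instance produces, here is a small standalone sketch that repeats the MyColors definition in a Main module (so it runs without the project) and sorts the colors. Because compare uses show, values are ordered alphabetically by name, so Green < Red holds while Red > Silver does not.

```haskell
module Main where

import Data.List (sort)

-- Same type and Ord instance as src/MyColors.hs, repeated so the
-- example is self-contained.
data MyColors = Orange | Red | Blue | Green | Silver
  deriving (Show, Eq)

instance Ord MyColors where
  -- Order values by their printed names, i.e. alphabetically.
  compare c1 c2 = compare (show c1) (show c2)

main :: IO ()
main = do
  print (sort [Orange, Red, Blue, Green, Silver])  -- [Blue,Green,Orange,Red,Silver]
  print (Green < Red)                              -- True:  "Green" < "Red"
  print (Red > Silver)                             -- False: "Red" < "Silver"
```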

And the new test/Spec.hs file:

 1 import Test.Hspec
 2
 3 import MyColors
 4
 5 main :: IO ()
 6 main = hspec spec
 7
 8 spec :: Spec
 9 spec = do
10   describe "head" $ do
11     it "test removing first list element" $ do
12       head [1,2,3,4] `shouldBe` 1
13       head ["the", "dog", "ran"] `shouldBe` "dog" -- should fail
14   describe "MyColors tests" $ do
15     it "test custom 'compare' function" $ do
16       MyColors.Green < MyColors.Red `shouldBe` True
17       Red > Silver `shouldBe` True -- should fail

Notice how two of the tests are meant to fail as an example. Let’s run the tests:

 1 $ stack test
 2 TestingHaskell-0.1.0.0: test (suite: TestingHaskell-test)
 3
 4 Progress: 1/2
 5 head
 6   test removing first list element FAILED [1]
 7 MyColors tests
 8   test custom 'compare' function FAILED [2]
 9
10 Failures:
11
12 test/Spec.hs:13:
13 1) head test removing first list element
14 expected: "dog"
15 but got: "the"
16
17 test/Spec.hs:17:
18 2) MyColors tests test custom 'compare' function
19 expected: True
20 but got: False
21
22 Randomized with seed 1233887367
23
24 Finished in 0.0139 seconds
25 2 examples, 2 failures
26
27 Completed 2 action(s).
28 Test suite failure for package TestingHaskell-0.1.0.0
29 TestingHaskell-test: exited with: ExitFailure 1
30 Logs printed to console

In line 1, stack test asks stack to build and run the project's test suite. The generated file TestingHaskell.cabal defines that suite with its source files in the subdirectory test and test/Spec.hs as its main module. In the listing for file test/Spec.hs we have two tests that fail on purpose, and you see the output for the failed tests at lines 12-15 and 17-20.

Because the Haskell compiler does such a good job at finding type errors I have fewer errors in my Haskell code compared to languages like Ruby and Common Lisp. As a result I find myself writing fewer tests for my Haskell code than I would write in other languages. Still, I recommend some tests for each of your projects; decide for yourself how much relative effort you want to put into writing tests.

Pure Haskell Wrap Up

I hope you are starting to get an appreciation for using composition of functions and higher order functions to enable us to compose programs from smaller pieces that can be joined together.

This composition is made easier when using pure functions, which always return the same value when called with the same arguments.

We will continue to see examples of how lazy evaluation simplifies code because we can use infinitely large lists with the assurance that values are not calculated until they are needed.
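A small illustration using only the Prelude: squares is an infinite list, but take, takeWhile, and filter only force the elements they need, so each expression below finishes. The names squares and firstOddSquares are hypothetical.

```haskell
module Main where

-- An infinite list of square numbers; laziness means it is never built in full.
squares :: [Integer]
squares = map (^ 2) [1 ..]

-- The first n squares that are odd.
firstOddSquares :: Int -> [Integer]
firstOddSquares n = take n (filter odd squares)

main :: IO ()
main = do
  print (take 5 squares)            -- [1,4,9,16,25]
  print (takeWhile (< 50) squares)  -- [1,4,9,16,25,36,49]
  print (firstOddSquares 4)         -- [1,9,25,49]
```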

In addition to Haskell code generally having fewer errors (after it gets past the compiler!), other advantages of functional programming include more concise code that is easy to read and understand once you get some experience with the language.

Tutorial on Impure Haskell Programming

One of the great things about Haskell is that the language encourages us to think of our code in two parts:

- Pure functional code (functions have no side effects) that is easy to write and test. Functional code tends to be shorter and less imperative: it uses maps and recursion rather than the loops you would write in Java or C++.

- Impure code that deals with side effects like file and network IO, maintains state in a type-safe way, and isolates imperative code that has side effects.

In his excellent Functional Programming with Haskell class at edX, Erik Meijer described pure code as islands in an ocean of impure code. He says that it is a design decision how much of your code is pure (the islands) and how much is impure (the ocean). This model of looking at Haskell programs works for me.

My use of the word “impure” for Haskell code with side effects is common. Haskell is a purely functional language, and side effects like I/O are best handled in a pure functional way by wrapping values in monads.

In addition to showing you reusable examples of impure code that you will likely need in your own programs, a major theme of this chapter is handling impure code in a convenient, type-safe fashion. Monads such as IO let us manage side effects and state safely. I will introduce Monad types as they are needed for the examples in this chapter. This tutorial-style introduction will prepare you for understanding the sample applications later.

Hello IO () Monad

I showed you many examples of pure code in the last chapter, but most examples in source files (as opposed to those shown in a GHCi REPL) had a bit of impure code in them: a main function like the following one, which simply writes a string of characters to standard output:

main = do
  print "hello world"

The type of function main is:

*Main> :t main
main :: IO ()

A value of type IO () is an IO action wrapped in a type-safe way. Haskell evaluates lazily, and an IO action is not performed until it is needed, that is, until it is sequenced into main (or entered at the GHCi prompt). Every IO action produces exactly one result when it runs, and for IO () that result is the unit value (). Think of the word “mono” (or “one”) here, since an IO action always yields one value. Monads are also used to connect together parts of a program.
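A minimal sketch (greetings is a hypothetical name) showing that IO actions are ordinary values: defining a list of them prints nothing, only the actions sequenced into main are performed, and running an IO () action yields the single value ().

```haskell
module Main where

-- A list of IO actions. Defining it performs no output: each element
-- describes an action rather than being the result of running it.
greetings :: [IO ()]
greetings = [putStrLn "first", putStrLn "second", putStrLn "third"]

main :: IO ()
main = do
  -- Only the actions that are sequenced into main actually run.
  greetings !! 2              -- prints: third
  -- Running an IO () action yields one result, the unit value ().
  result <- greetings !! 0    -- prints: first
  print result                -- prints: ()
  -- sequence_ runs every action in the list, in order.
  sequence_ greetings         -- prints: first, second, third
```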

What is it about the function main in the last example that makes its type an IO ()? Consider the simple main function here:

module NoIO where

main = do
  let i = 1 in
    2 * i

and its type:

*Main> :l NoIO
[1 of 1] Compiling NoIO ( NoIO.hs, interpreted )
Ok, modules loaded: NoIO.
*NoIO> main
2
*NoIO> :t main
main :: Integer
*NoIO>

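OK, now you see that there is nothing special about a function named main in a module loaded into GHCi: it gets its type from the type of the value it returns. (The main function that serves as a compiled program's entry point is the exception: it must have an IO type.) It is common for the return type to depend on the types of the function's arguments. The first example has type IO () because its do expression ends with a call to print, which returns an IO () value: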

*Main> :t print
print :: Show a => a -> IO ()
*Main> :t putStrLn
putStrLn :: String -> IO ()
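The Prelude defines print as putStrLn applied to the result of show, which is where the quote characters around a printed string come from. The sketch below uses a hypothetical helper, myPrint, that behaves the same way; the comments show what each line writes.

```haskell
module Main where

-- Hypothetical helper with the same behavior as the Prelude's print:
-- convert the value to a String with show, then write that line.
myPrint :: Show a => a -> IO ()
myPrint x = putStrLn (show x)

main :: IO ()
main = do
  putStrLn "cat"       -- cat
  print "cat"          -- "cat"   (show adds the quote characters)
  myPrint "cat"        -- "cat"
  print (1 :: Int)     -- 1
  putStrLn (show 1.5)  -- 1.5
```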

The function print shows the enclosing quote characters when displaying a string, while putStrLn does not. Going back to the first example, what happens when we stitch together several expressions that have type IO ()? Consider:

main = do
  print 1
  print "cat"

Function main is still of type IO (). You have seen do expressions frequently in examples and now we will dig into what the do expression is and why we use it.

The do notation makes working with monads easier. There are alternatives to using do that we will look at later.
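As a preview, here is a minimal sketch of one action written twice, once with do and once with the bind operators >> and >>= that do notation is translated into. The names askName, greeting, greetDo, and greetBind are hypothetical; askName stands in for getLine so the example runs without keyboard input.

```haskell
module Main where

-- Hypothetical stand-in for getLine so the example runs without input.
askName :: IO String
askName = return "Ada"

-- Pure helper shared by both versions.
greeting :: String -> String
greeting name = "Hello, " ++ name

-- With do notation: one statement per line.
greetDo :: IO ()
greetDo = do
  putStrLn "What is your name?"
  name <- askName
  putStrLn (greeting name)

-- The same action with explicit operators: >> runs two actions in order and
-- discards the first result; >>= passes the first action's result to a function.
greetBind :: IO ()
greetBind =
  putStrLn "What is your name?" >>
  askName >>= \name ->
  putStrLn (greeting name)

main :: IO ()
main = do
  greetDo    -- What is your name? / Hello, Ada
  greetBind  -- What is your name? / Hello, Ada
```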

One thing to note is that if you make bindings inside a do expression using a let ... in expression, you need to wrap the lines that follow the in keyword in a new (inner) do expression when there is more than one of them. The way to avoid a nested do expression is to omit the in keyword when using let inside a do block. Yes, this sounds complicated, but let’s clear up any confusion by looking at the examples found in the file ImPure/DoLetExample.hs (you might also want to look at the similar example file ImPure/DoLetExample2.hs, which uses bind operators instead of a do statement; we will look at bind operators in the next section):

module DoLetExample where

example1 = do -- good style
  putStrLn "Enter an integer number:"
  s <- getLine
  let number = (read s :: Int) + 2
  putStrLn $ "Number plus 2 = " ++ (show number)

example2 = do -- avoid using "in" inside a do statement
  putStrLn "Enter an integer number:"
  s <- getLine
  let number = (read s :: Int) + 2 in